When Were The Game Of Thrones Books Released

Model Card for OLMo 7B

For transformers versions v4.40.0 or newer, we suggest using OLMo-7B-hf instead.

OLMo is a series of **O**pen **L**anguage **Mo**dels designed to enable the science of language models.
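The version advice above can be sketched as a small check. The helpers below are hypothetical and take a transformers version string and return a checkpoint ID. The repository IDs `allenai/OLMo-7B-hf` and `allenai/OLMo-7B` are assumptions about Hugging Face Hub naming and are not stated in this card.

```python
# Sketch (hypothetical helpers): choose an OLMo 7B checkpoint ID from a
# transformers version string, following the "v4.40.0 or newer" advice.


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse the leading numeric parts of a version, e.g. "4.40.0" -> (4, 40, 0).

    Pre-release/dev suffixes such as "dev0" or "rc1" are ignored, which is a
    simplification compared with full PEP 440 ordering.
    """
    parts: list[int] = []
    for piece in version.split("."):
        # Keep only the leading digits of each dot-separated piece.
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    # Pad missing components with zeros so "4.40" compares like "4.40.0".
    padded = (parts + [0, 0, 0])[:3]
    return (padded[0], padded[1], padded[2])


def pick_olmo_7b_repo(transformers_version: str) -> str:
    # Assumed Hub repo IDs: "-hf" for the native transformers integration,
    # the plain name for the original release.
    if parse_version(transformers_version) >= (4, 40, 0):
        return "allenai/OLMo-7B-hf"
    return "allenai/OLMo-7B"


print(pick_olmo_7b_repo("4.39.3"))  # allenai/OLMo-7B
print(pick_olmo_7b_repo("4.47.1"))  # allenai/OLMo-7B-hf
```

In a real environment you would pass `transformers.__version__`. If exact PEP 440 ordering of pre-releases matters, the third-party `packaging` library's `Version` class is a more robust comparison than this hand-rolled parser.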

To obtain the official LLaMA 2 weights, please see the "Obtaining and using the Facebook LLaMA 2 model" section. There is also a large selection of pre-quantized GGUF models available on …

Nov 26, 2024 · Note: Instruct version of OLMo 2 1B. This model is best used for chat applications.

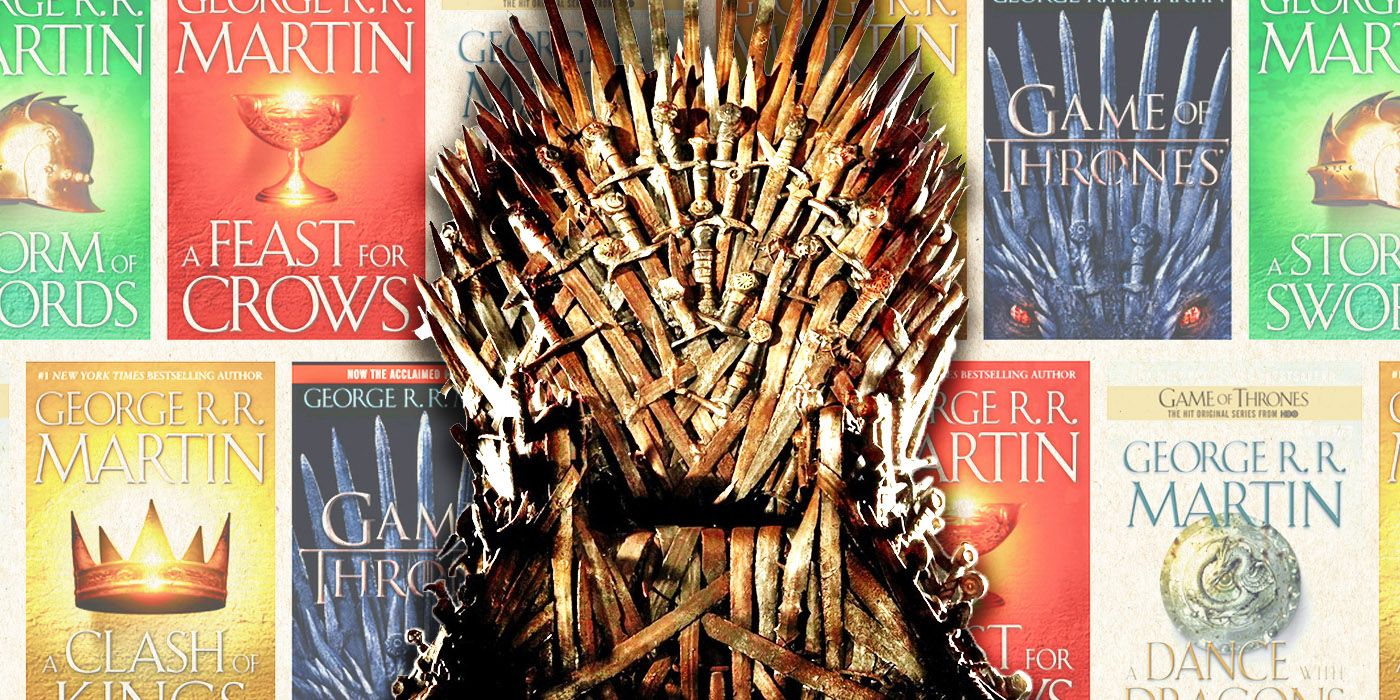

When Were The Game Of Thrones Books Released

When Were The Game Of Thrones Books Released

https://static1.cbrimages.com/wordpress/wp-content/uploads/2024/04/game-of-thrones-books.jpg

Brewers Opening Day Sweet 16 Stephen A s RIGHT The Bill Michaels

https://lookaside.fbsbx.com/lookaside/crawler/media/?media_id=831337865769469&get_thumbnail=1

Seminole Headlines 6 3 25 FSU Football FSU Baseball Warchant TV

https://lookaside.fbsbx.com/lookaside/crawler/media/?media_id=493346383842755&get_thumbnail=1

Model Card for OLMo 7B Instruct

OLMo is a series of **O**pen **L**anguage **Mo**dels designed to enable the science of language models. The OLMo base models are trained on the Dolma dataset.

OLMo-2-1124-13B-Instruct

NOTE (1/3/2025 update): Upon the initial release of OLMo 2 models, we realized the post-trained models did not share the pre-tokenization logic that the base …

Nov 27, 2024 · Model Card for OLMo 2 13B: We introduce OLMo 2, a new family of 7B and 13B models trained on up to 5T tokens. These models are on par with or better than equivalently …

Nov 26, 2024 · Model Details: Model Card for OLMo 2 7B: We introduce OLMo 2, a new family of 7B and 13B models featuring a 9-point increase in MMLU, among other evaluation …

OLMo-2-1124-13B-Instruct: OLMo 2 13B Instruct November 2024 is a post-trained variant of the OLMo 2 13B November 2024 model, which has undergone supervised fine-tuning on an OLMo …

The OLMo 2 configuration class is used to instantiate an OLMo2 model according to the specified arguments, which define the model architecture. Instantiating a configuration with the defaults will yield a similar configuration to …

Nov 26, 2024 · OLMo-2-1124-7B-DPO: OLMo 2 7B DPO November 2024 is a post-trained variant of the OLMo 2 7B November 2024 model, which has undergone supervised fine-tuning on an …

Check out the OLMo 2 paper (forthcoming) or the Tülu 3 paper for more details. This reward model was used to initialize the value models for both the 7B and 13B RLVR training.
