PySpark: Join Two DataFrames on Multiple Conditions

Jul 12, 2017 · PySpark: how to fillna values in a DataFrame for specific columns?

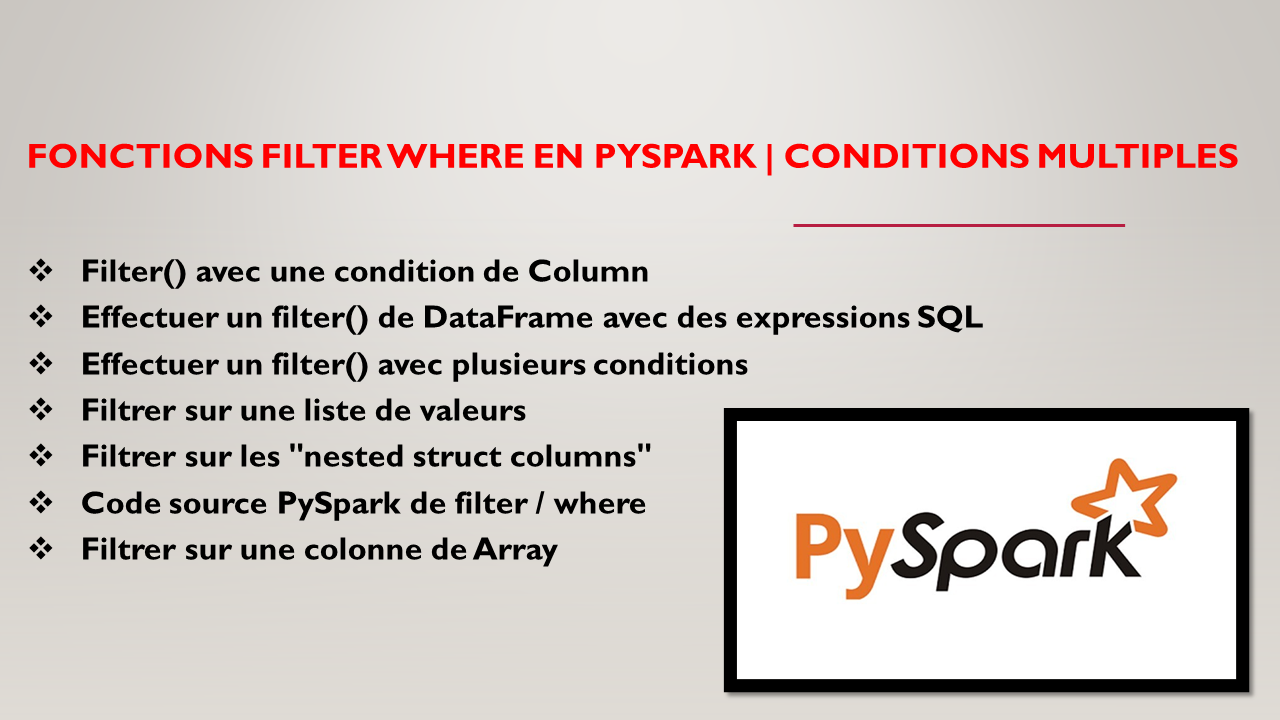

`pyspark.sql.functions.when` takes a Boolean `Column` as its condition. When using PySpark, it's often useful to think "Column expression" when you read "Column". Logical operations on PySpark columns use the bitwise operators `&` (and), `|` (or) and `~` (not).

Nov 4, 2016 · I am trying to filter a DataFrame in PySpark using a list. I want to either filter based on the list or include only those records with a value in the list. My code does not work.

Jun 8, 2016 · In PySpark, multiple conditions for `when` can be built using `&` (for and) and `|` (for or). Note: in PySpark it is important to enclose every expression in parentheses when combining them into a condition.

I have a PySpark DataFrame consisting of one column, called `json`, where each row is a Unicode string of JSON. I'd like to parse each row and return a new DataFrame where each row is the …

With a PySpark DataFrame, how do you do the equivalent of pandas `df['col'].unique()`? I want to list out all the unique values in a PySpark DataFrame column, not the SQL-type way.

Jul 13, 2015 · I am using Spark 1.3.1 (PySpark) and I have generated a table using a SQL query. I now have an object that is a DataFrame. I want to export this DataFrame object, which I have called …

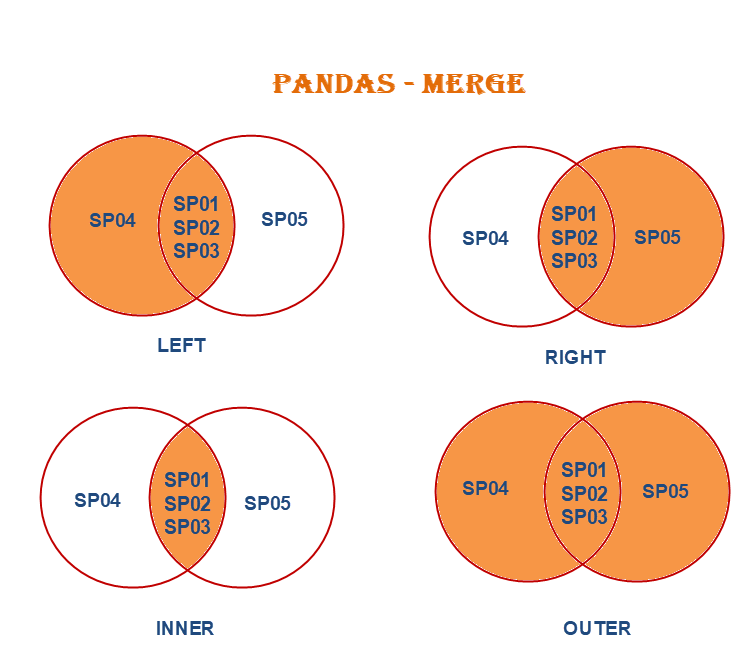

Mar 21, 2018 · In PySpark, how do you add (concatenate) a string to a column?

I come from a pandas background and am used to reading data from CSV files into a DataFrame and then simply changing the column names to something useful using a simple command …

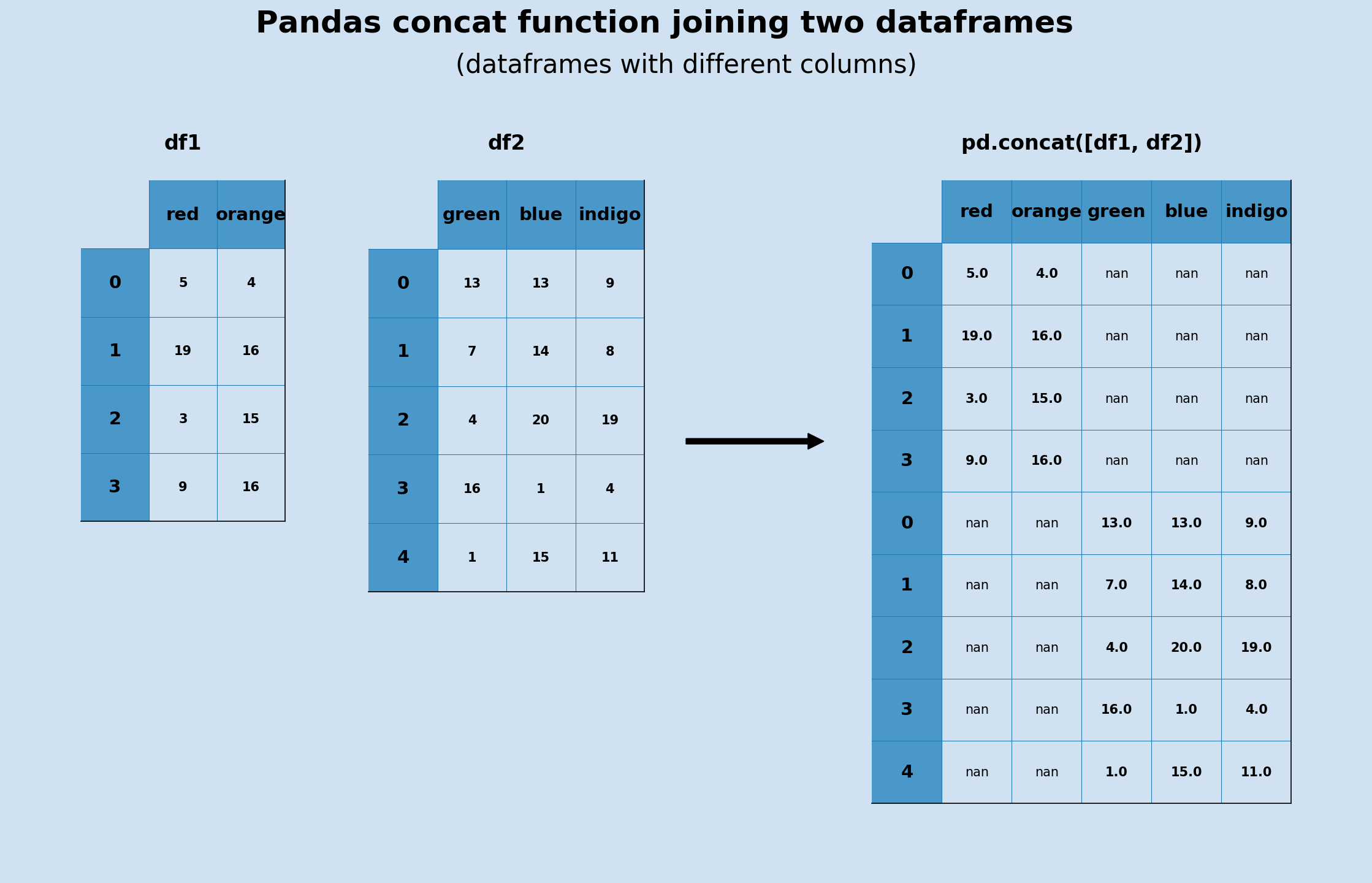

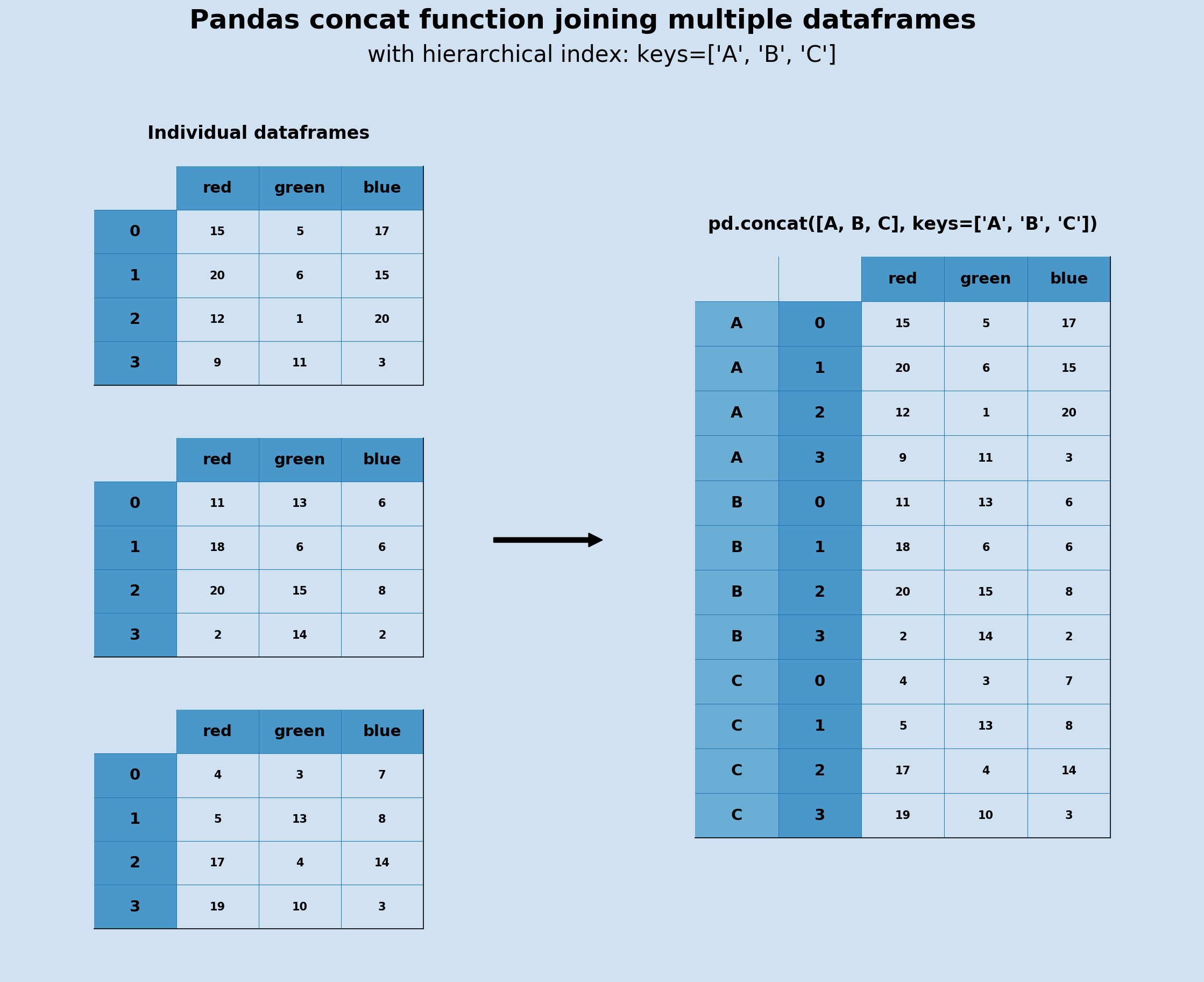
