Linear Regression Data Analysis

f(x) = ax + b. An equation written as f(x) = C is called linear if f
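As a rough illustration of the linear form f(x) = ax + b, the sketch below estimates a and b from data points by ordinary least squares. The function name `fit_line` and the sample points are assumptions made up for this example; they do not come from the page or from any of the linked projects.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Return (a, b) minimizing the sum of squared errors of y = a*x + b."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 points")

    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n

    # Sum of squared deviations of x, and sum of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    a = sxy / sxx            # slope
    b = mean_y - a * mean_x  # intercept
    return a, b


if __name__ == "__main__":
    # Illustrative points that lie exactly on y = 2x + 1 (made-up data).
    a, b = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
    print(f"a = {a}, b = {b}")
```

With noisy data the same function returns the best-fitting slope and intercept rather than an exact match. The closed-form expressions are used here only to keep the sketch free of dependencies.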

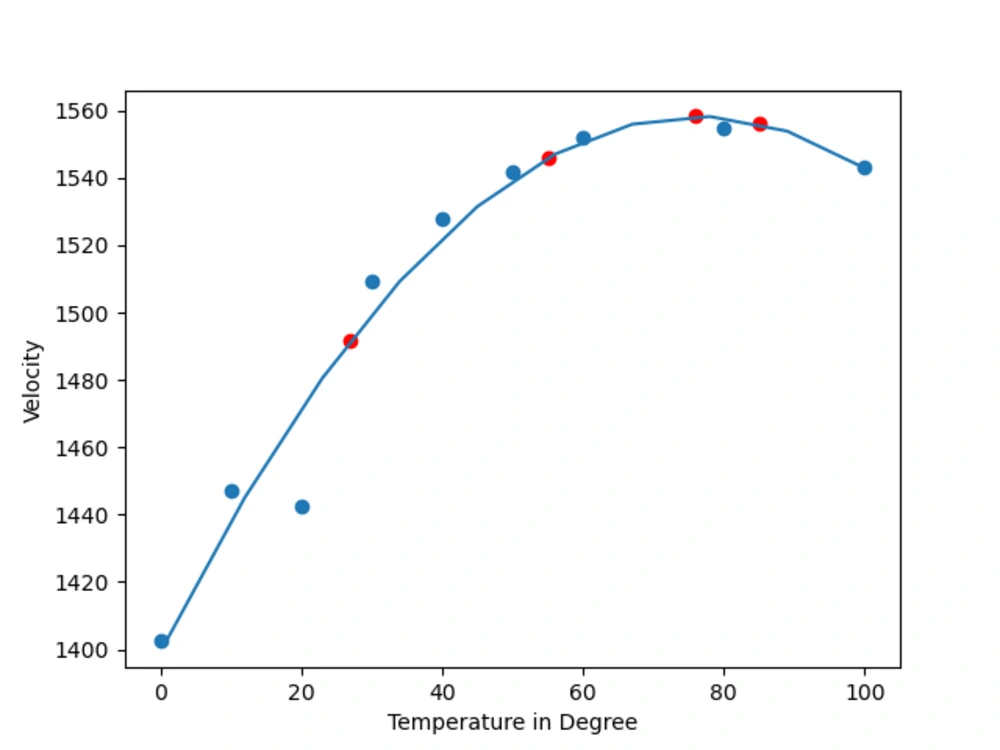

Linear Regression Data Analysis

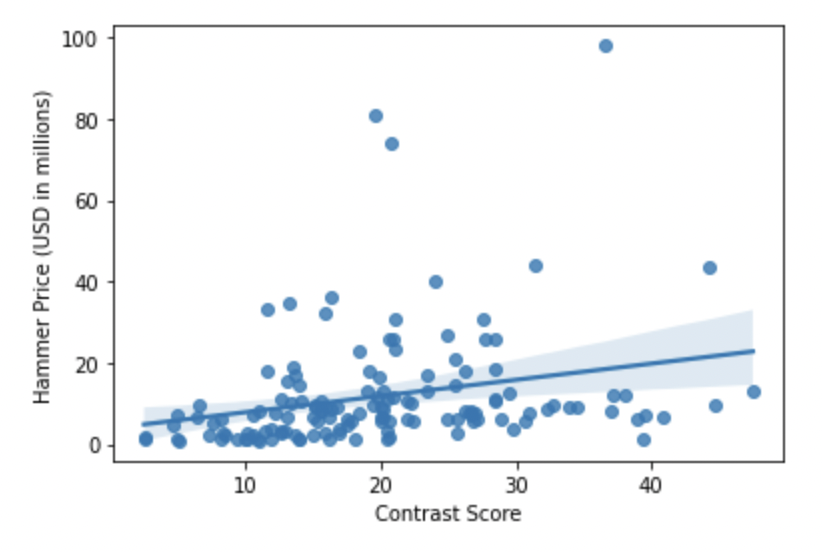

https://user-images.githubusercontent.com/104586192/184948419-5e9d61d9-7d0d-4f07-a2b1-822c5f258cab.png

GitHub Cameron M Bailey Linear Regression Data Analysis Basquiat

https://user-images.githubusercontent.com/104586192/184947991-0923cbe9-9a59-4c39-9bcb-bbd6231d8cf5.png

GitHub Cameron M Bailey Linear Regression Data Analysis Basquiat

https://user-images.githubusercontent.com/104586192/184963006-73e06533-42ff-4752-98e5-892956befc9d.png

Sep 22, 2020 · Introduction to Linear Algebra; Linear Algebra Done Right 9 0

More pictures related to Linear Regression Data Analysis

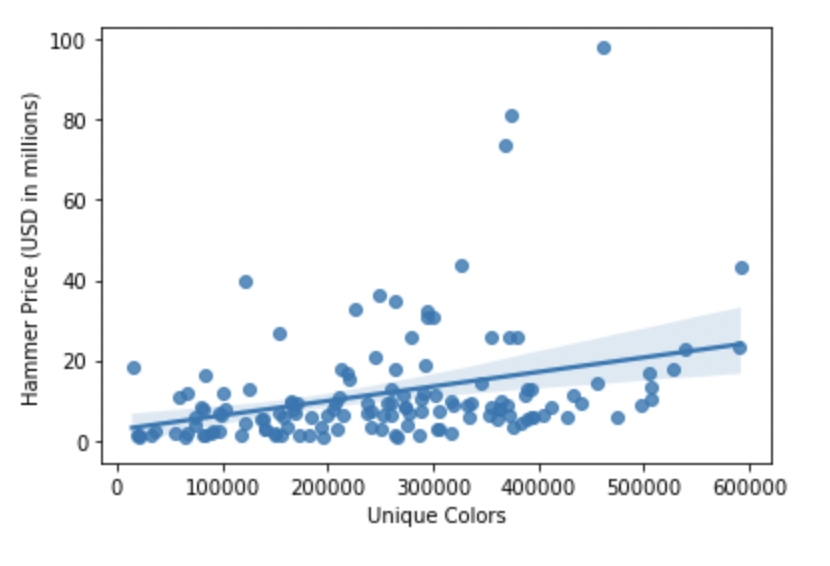

GitHub Cameron M Bailey Linear Regression Data Analysis Basquiat

https://user-images.githubusercontent.com/104586192/184962895-120fe941-5dc4-4420-b7d3-0309b38b9fe0.png

GitHub Cameron M Bailey Linear Regression Data Analysis Basquiat

https://user-images.githubusercontent.com/104586192/184940640-cd4467f4-9d91-4a3c-bebd-a4bc1cb03983.jpeg

Linear Regression Data Analysis With Visuals Upwork

https://res.cloudinary.com/upwork-cloud/image/upload/c_scale,w_1000/v1709150140/catalog/1601221522902024192/llzt7snddxfe8m8zzzxd.webp

Introduction to Linear Algebra (Gilbert Strang); Step-by-step tutorial for linear fitting (6) with errors (Origin)

Linear Regression Data Analysis With Visuals Upwork

https://res.cloudinary.com/upwork-cloud/image/upload/c_scale,w_1000/v1709150139/catalog/1601221522902024192/nyhw1gzoxvsepi3hng08.webp

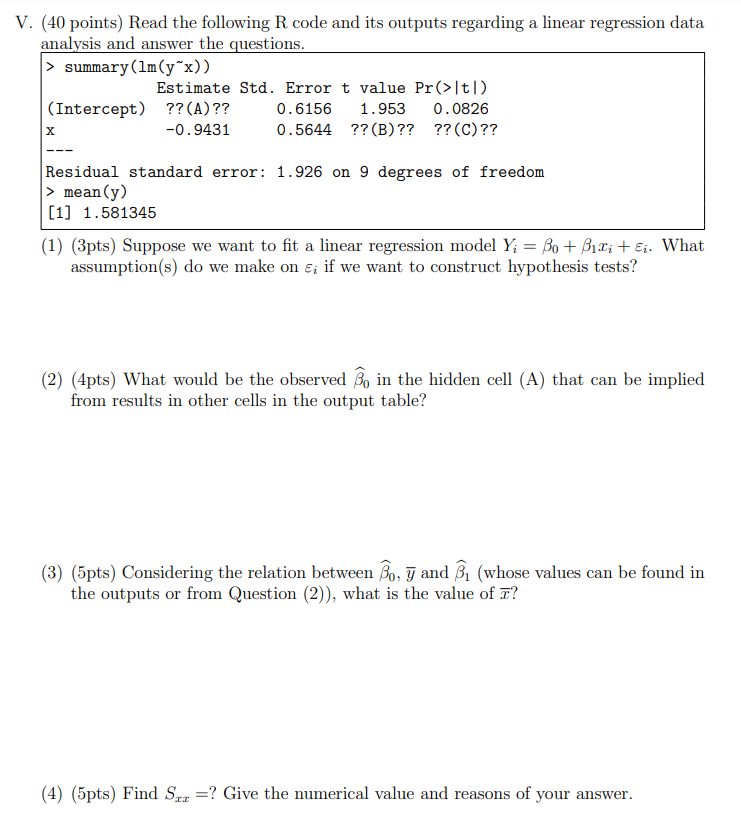

Solved 40 Points Read The Following R Code And Its Outputs Chegg

https://media.cheggcdn.com/media/754/7540edd3-58f6-4629-a258-e78e7d264a46/phpNA1GTA
